Machine Learning & AI

Editorial Team

12 Nov 2025

In 2019, researchers found that a healthcare algorithm used by hospitals across America systematically discriminated against Black patients, denying them care that white patients with identical health conditions received. The algorithm wasn't programmed to be racist. It simply learned from historical data that reflected existing healthcare disparities. This is the hidden danger of AI bias, and it's why AI ethics has become one of the most critical conversations in technology today.

As artificial intelligence systems make increasingly important decisions affecting millions of lives, from determining who gets hired to who receives medical treatment, understanding AI ethics and bias isn't just an academic exercise. It's a fundamental responsibility for anyone building, deploying, or using AI systems. The algorithms we create today will shape society for generations to come.

Ethical AI isn't about slowing down innovation. It's about ensuring that as AI becomes more powerful, it becomes more fair, transparent, and accountable. The question isn't whether we should build AI, but how we build it responsibly. This comprehensive guide explores what AI ethics means, examines real-world examples of AI bias, identifies critical AI ethics issues, and provides practical steps for building responsible AI systems.

Understanding AI Ethics: What It Really Means

AI ethics refers to the moral principles and guidelines that govern the development, deployment, and use of artificial intelligence systems. It encompasses questions about fairness, accountability, transparency, privacy, and the broader societal impact of AI technologies. Ethical AI ensures that artificial intelligence systems benefit humanity while minimizing potential harms and respecting fundamental human rights and values.

The field of AI ethics addresses profound questions that technology alone cannot answer. Should AI systems be allowed to make life-or-death decisions? Who bears responsibility when an autonomous vehicle causes an accident? How do we ensure AI doesn't amplify existing societal inequalities? These questions require thoughtful consideration of values, rights, and the kind of future we want to create.

Responsible AI development demands more than just technical excellence. It requires understanding how AI systems impact real people in real situations. A facial recognition system that works perfectly in lab conditions but fails to recognize darker skin tones in practice isn't just technically flawed; it's ethically problematic. Ethical AI must work fairly and safely for everyone, not just the people who built it.

The importance of AI ethics grows as AI systems gain autonomy and influence. When AI recommends medical treatments, determines creditworthiness, influences judicial sentencing, or controls critical infrastructure, the stakes become existential. Ethical AI frameworks help ensure these powerful systems align with human values and serve the common good rather than narrow interests.

What Is AI Bias and Why Does It Matter?

AI bias occurs when artificial intelligence systems produce systematically unfair outcomes that discriminate against certain groups of people. Unlike human prejudice, algorithmic bias often appears objective because it's generated by mathematical models and data analysis. This perceived objectivity makes AI bias particularly insidious, as people tend to trust automated decisions without questioning underlying assumptions or training data.

Bias in AI emerges from multiple sources throughout the development lifecycle. Training data bias happens when datasets used to teach AI systems don't represent reality accurately or fairly. If a hiring algorithm trains primarily on resumes from successful male employees, it learns to favor male candidates. Algorithmic bias can also stem from how problems are framed, which features are prioritized, and how success is measured.

The impact of AI bias extends far beyond individual inconvenience. Biased AI systems in criminal justice have led to harsher sentences for minority defendants. Discriminatory algorithms in lending have denied mortgages to qualified applicants based on zip codes that correlate with race. Biased hiring AI has filtered out qualified women from job pools. These aren't hypothetical concerns. They're documented harms affecting real people's lives, livelihoods, and opportunities.

Understanding AI bias requires recognizing that bias isn't always intentional or obvious. Well-meaning developers can create biased systems without realizing it. Historical data often reflects past discrimination. Seemingly neutral criteria like "previous experience" or "neighbourhood stability" can encode systemic inequalities. Responsible AI requires actively identifying and mitigating these hidden biases rather than assuming algorithms are inherently fair.

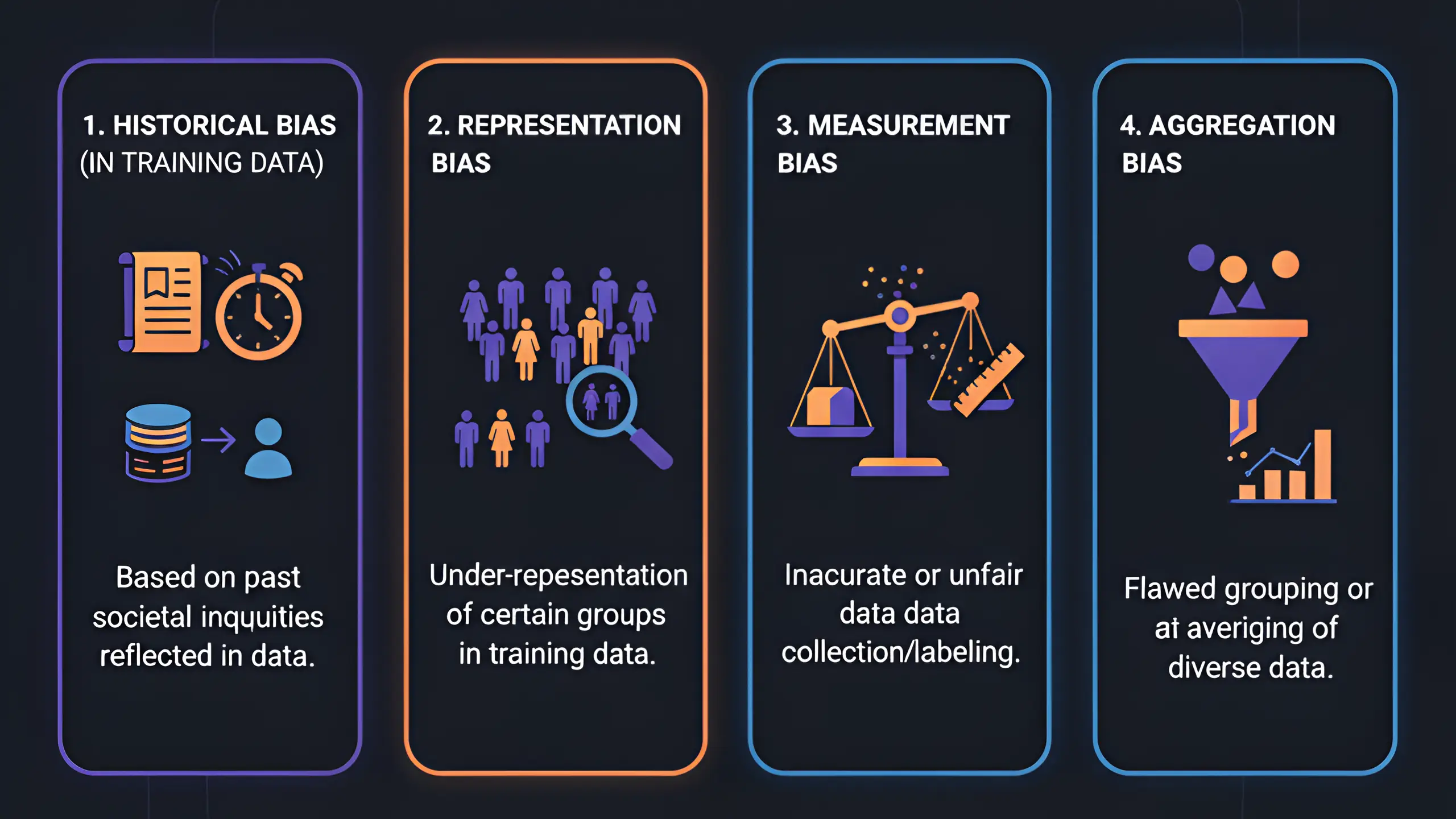

Types of AI Bias You Need to Know

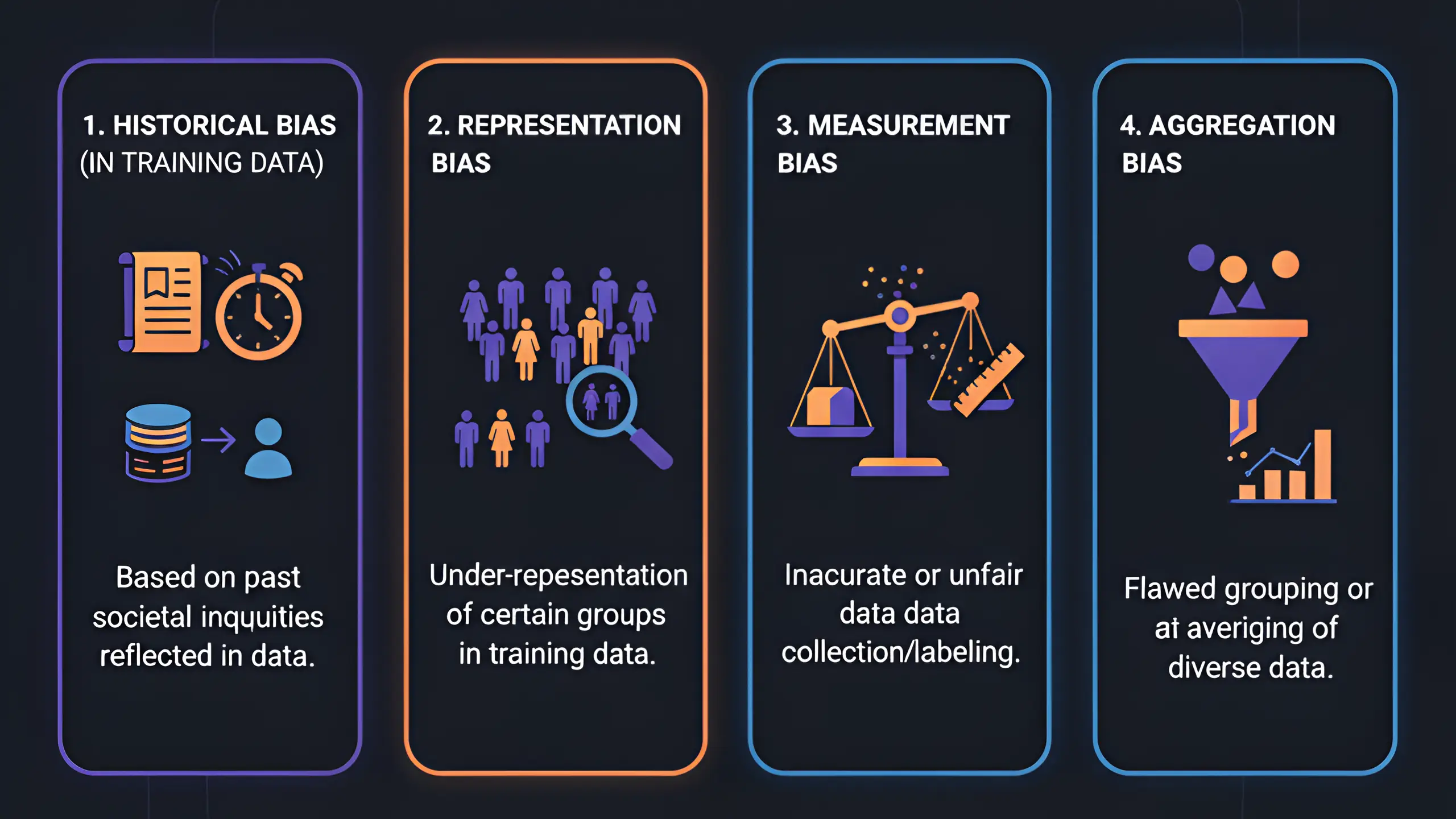

Historical Bias in Training Data

Historical bias occurs when training data reflects existing societal prejudices and inequalities. If an AI system learns from historical hiring decisions where women were systematically passed over for leadership roles, it learns to replicate that discrimination. The algorithm doesn't understand it's being unfair. It simply optimizes for patterns it observes in the data, perpetuating historical injustices into the future.

This type of bias in AI proves particularly challenging because the data itself is technically accurate. The problem isn't that the data is wrong, but that it accurately reflects an unjust past. Responsible AI development must recognize when historical accuracy shouldn't guide future decisions and actively work to correct these biases rather than amplify them.

Representation Bias

Representation bias happens when training datasets don't adequately represent all groups affected by the AI system. Facial recognition systems trained predominantly on white faces perform poorly on people with darker skin tones. Medical AI trained mostly on data from men makes less accurate diagnoses for women. This algorithmic bias leads to AI systems that work well for some populations but fail others.

The consequences of representation bias can be severe. Medical devices that monitor oxygen levels work less accurately on patients with darker skin. Voice recognition systems struggle with non-native accents. Biased AI creates a two-tiered reality where technology serves some people well while failing others, often those already disadvantaged by other societal factors.

Measurement Bias

Measurement bias emerges when the wrong proxy measures are used to predict desired outcomes. A common example involves using arrest rates to predict crime risk. Arrest rates reflect policing patterns as much as actual crime, meaning areas with heavier policing show more arrests regardless of actual crime levels. AI systems using arrest data as training input learn to predict policing patterns, not public safety risks, creating a feedback loop that directs more policing to already over-policed communities.

This type of AI bias demonstrates how technically sound systems can produce ethically problematic results. The algorithm works as designed, optimizing the chosen metrics. The problem lies in assuming those metrics accurately capture what we actually care about. Ethical AI requires careful consideration of what we measure and whether those measurements align with our true objectives.
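The feedback loop described above can be sketched with a toy simulation. This is a minimal illustration under stated assumptions, not a model of any real policing system: the function name `simulate_patrol_feedback`, the two districts, and every number are hypothetical. Both districts are given the same true crime rate, and patrols are reallocated each round in proportion to recorded arrests.

```python
def simulate_patrol_feedback(initial_patrol_share, true_crime_rate, rounds=5):
    """Reallocate patrols each round in proportion to the previous round's arrests.

    Assumption (hypothetical): recorded arrests in a district scale with
    patrol presence multiplied by the district's true crime rate.
    """
    share = list(initial_patrol_share)
    history = [tuple(share)]
    for _ in range(rounds):
        # Arrests reflect where officers are sent, not only where crime occurs.
        arrests = [s * r for s, r in zip(share, true_crime_rate)]
        total = sum(arrests)
        # The "risk model" simply follows the arrest counts.
        share = [a / total for a in arrests]
        history.append(tuple(share))
    return history


# Two districts with identical true crime rates but an uneven starting allocation.
history = simulate_patrol_feedback([0.7, 0.3], [0.1, 0.1], rounds=5)
for round_number, (district_1, district_2) in enumerate(history):
    print(round_number, round(district_1, 3), round(district_2, 3))
```

Because arrests scale with patrol presence, the uneven starting split never corrects itself even though the underlying rates are identical. The arrest data keeps confirming the initial allocation rather than measuring public safety.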

Aggregation Bias

Aggregation bias occurs when a single model is applied to diverse groups with different characteristics. A diabetes risk prediction model might work well on average but perform poorly for specific demographic subgroups with different risk factors. Healthcare AI trained on aggregated data might miss important variations between populations, leading to inappropriate treatment recommendations.

Responsible AI addresses aggregation bias by developing group-specific models or ensuring systems adapt to individual characteristics rather than forcing everyone through the same one-size-fits-all algorithm. Recognizing that diversity requires diverse approaches is fundamental to ethical AI development.
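A small sketch can make the difference between one pooled model and group-specific models concrete. Everything here is an illustrative assumption: the scores, labels, group names, and the helper functions `errors_at` and `best_threshold` are hypothetical, and a single decision threshold stands in for a full model.

```python
def errors_at(samples, threshold):
    """Count misclassifications when predicting positive for score >= threshold."""
    return sum((score >= threshold) != bool(label) for score, label in samples)


def best_threshold(samples):
    """Return the candidate threshold with the fewest errors (first wins ties)."""
    candidates = sorted({score for score, _ in samples})
    return min(candidates, key=lambda t: errors_at(samples, t))


# Hypothetical (risk_score, has_condition) pairs. In group B the condition
# appears at lower scores than in group A, and group B is the smaller group.
group_a = [(3, 0), (4, 0), (5, 0), (5, 0), (6, 1), (6, 1), (7, 1), (8, 1)]
group_b = [(2, 0), (3, 0), (4, 1), (5, 1)]

pooled_threshold = best_threshold(group_a + group_b)
print("pooled threshold:", pooled_threshold)
print("errors in A:", errors_at(group_a, pooled_threshold))
print("errors in B:", errors_at(group_b, pooled_threshold))

# Fitting each group separately removes the errors concentrated in group B.
print("group B threshold:", best_threshold(group_b))
```

In this toy data the pooled threshold is tuned to the larger group, so every error lands on the smaller one. Whether separate models or a single model that accounts for group differences is appropriate depends on the domain and on legal constraints.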

Real-World AI Bias Examples That Changed Everything

Amazon's Hiring Algorithm

Amazon developed an AI recruiting tool to screen resumes and identify top candidates. The system trained on ten years of historical hiring data, which predominantly featured male employees in technical roles. The biased algorithm learned to penalize resumes containing the word "women's" (as in "women's chess club captain") and to downgrade graduates of two all-women's colleges. Amazon discovered the bias and scrapped the system, but the incident highlighted how AI bias can emerge even at the world's most sophisticated tech companies.

This example of AI bias demonstrates that good intentions and technical expertise don't automatically produce fair outcomes. The engineers building the system weren't trying to discriminate. They simply used available data without recognizing how historical gender imbalances would corrupt the algorithm's learning. Ethical AI requires proactive bias detection, not reactive discovery after systems are deployed.

COMPAS and Criminal Justice

The COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) algorithm predicts defendant recidivism risk to inform bail and sentencing decisions across the United States. A 2016 ProPublica investigation found that COMPAS exhibited significant racial bias, incorrectly flagging Black defendants as high risk at nearly twice the rate it mislabelled white defendants. Meanwhile, white defendants were more likely to be incorrectly classified as low risk.

This AI bias example proved particularly troubling because it affected fundamental rights and freedoms. When biased algorithms influence who stays in jail and who goes free, who receives harsh sentences and who gets second chances, algorithmic bias becomes a matter of justice itself. The COMPAS case galvanized the responsible AI movement and sparked urgent conversations about algorithmic accountability in high-stakes domains.
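The disparity described in the COMPAS case is a difference in error rates between groups: false positives (flagged as high risk but did not reoffend) and false negatives (rated low risk but did reoffend). The sketch below shows how such rates could be computed per group. The function `error_rates_by_group`, the group labels, and the records are hypothetical and are not the COMPAS data or the method used in the original reporting.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Compute false positive and false negative rates for each group.

    records: iterable of (group, flagged_high_risk, reoffended) tuples.
    """
    counts = defaultdict(lambda: {"fp": 0, "tn": 0, "fn": 0, "tp": 0})
    for group, flagged, reoffended in records:
        if reoffended:
            counts[group]["tp" if flagged else "fn"] += 1
        else:
            counts[group]["fp" if flagged else "tn"] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]  # people who did not reoffend
        positives = c["fn"] + c["tp"]  # people who did reoffend
        rates[group] = {
            "false_positive_rate": c["fp"] / negatives if negatives else None,
            "false_negative_rate": c["fn"] / positives if positives else None,
        }
    return rates


# Hypothetical toy records, chosen only to show the two error types diverging.
records = (
    [("x", True, False)] * 2 + [("x", False, False)] * 2
    + [("x", True, True)] * 3 + [("x", False, True)] * 1
    + [("y", True, False)] * 1 + [("y", False, False)] * 3
    + [("y", True, True)] * 2 + [("y", False, True)] * 2
)
rates = error_rates_by_group(records)
print(rates)
```

Both groups in this toy data have the same overall accuracy, yet group x is wrongly flagged twice as often while group y is wrongly cleared twice as often. Overall accuracy alone would hide that difference.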

Healthcare Algorithm Disparities

A widely used healthcare algorithm that guided treatment decisions for millions of Americans showed dramatic racial bias. The system used healthcare costs as a proxy for health needs, assuming that patients requiring more expensive care were sicker. However, because of systemic inequalities in healthcare access, Black patients received less medical care and incurred lower costs than white patients with identical health conditions. The biased algorithm concluded they were healthier and needed less care, systematically denying Black patients access to programs designed for the chronically ill.

This example of bias in AI illustrates how seemingly neutral choices, like using cost as a metric, can encode devastating discrimination. The algorithm didn't consider race explicitly. The bias emerged from structural inequalities reflected in the proxy measurement. Fixing this AI bias required fundamentally rethinking how health needs are assessed, moving from cost-based to symptom-based predictions.
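The effect of the proxy choice can be illustrated with a few made-up patients. This is a sketch under loud assumptions: the `Patient` fields, the `select_for_program` helper, and all values are hypothetical, and a count of chronic conditions stands in for whatever direct measure of health need a real system would use.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    name: str
    chronic_conditions: int  # hypothetical direct measure of health need
    annual_cost: float       # hypothetical spending, shaped by access to care


def select_for_program(patients, key, slots):
    """Rank patients by the given key (highest first) and fill the available slots."""
    ranked = sorted(patients, key=key, reverse=True)
    return [p.name for p in ranked[:slots]]


patients = [
    Patient("P1", chronic_conditions=5, annual_cost=3000.0),  # high need, low access
    Patient("P2", chronic_conditions=5, annual_cost=9000.0),
    Patient("P3", chronic_conditions=2, annual_cost=7000.0),
    Patient("P4", chronic_conditions=1, annual_cost=1000.0),
]

by_cost = select_for_program(patients, key=lambda p: p.annual_cost, slots=2)
by_need = select_for_program(patients, key=lambda p: p.chronic_conditions, slots=2)
print("selected by cost:", by_cost)
print("selected by need:", by_need)
```

P1 and P2 are equally sick in this toy data, but ranking by cost drops P1 in favor of a healthier patient who simply received more expensive care. Neither ranking looks at race, which is why the problem is easy to miss.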

Facial Recognition Failures

Multiple studies have documented significant bias in commercial facial recognition systems. Research by MIT and Stanford found that facial analysis algorithms from major tech companies misclassified gender with error rates of less than 1% for lighter-skinned men but up to 35% for darker-skinned women. This AI bias creates serious consequences when these systems are deployed for security, law enforcement, or identity verification.

The facial recognition bias stems primarily from training data bias. Many facial recognition systems were trained predominantly on white faces, giving them insufficient exposure to the full range of human skin tones and facial features. This algorithmic bias reinforces existing inequalities, as the people most likely to be misidentified are often those already facing disproportionate surveillance and suspicion.

Critical AI Ethics Issues Facing Technology Today

Privacy and Data Protection

AI ethics issues around privacy have intensified as AI systems require massive amounts of personal data for training and operation. AI-powered surveillance, behavioral prediction, and profiling raise fundamental questions about consent, autonomy, and the right to privacy. When AI systems can predict personal characteristics, behaviors, and preferences from seemingly innocuous data, traditional privacy protections become inadequate.

The ethical issue extends beyond individual privacy to collective harms. AI systems trained on personal data can identify patterns affecting entire communities. Predictive policing algorithms trained on neighbourhood data affect everyone in those areas, not just individuals who consented to data collection. Responsible AI requires new frameworks that protect both individual and collective privacy rights.

Transparency and Explainability

The "black box" problem represents a critical AI ethics issue. Many powerful AI systems, particularly deep learning models, make decisions through complex processes that even their creators struggle to explain. When AI denies a loan, recommends medical treatment, or influences judicial sentencing, affected individuals deserve to understand why. Algorithmic transparency is both an ethical imperative and a practical necessity for identifying and correcting bias.

Explainable AI seeks to make AI decision-making interpretable to humans. However, there's often a trade-off between model performance and interpretability. More complex models may be more accurate but less explainable. Navigating this tension while ensuring adequate transparency represents an ongoing ethical challenge in responsible AI development.

Accountability and Responsibility

When AI systems cause harm, determining accountability becomes complicated. Is the developer responsible? The organization deploying the system? The user? The AI itself? Traditional liability frameworks struggle with AI's distributed responsibility and autonomous decision-making. Establishing clear accountability for AI systems is essential for ethical AI, yet remains one of the field's most challenging issues.

The accountability problem becomes more acute as AI systems gain autonomy. Self-driving cars that cause accidents, medical AI that misdiagnoses patients, and algorithmic trading systems that trigger market crashes all raise questions about who bears responsibility. Responsible AI frameworks must clearly define accountability chains and ensure meaningful recourse for those harmed by AI decisions.

Job Displacement and Economic Impact

AI automation threatens significant job displacement across industries, raising profound ethical questions about economic justice and societal responsibility. While AI creates new opportunities, the benefits and costs distribute unevenly. Workers in routine cognitive and physical jobs face displacement, while those with technical skills benefit from new opportunities. AI ethics must address how society manages this transition fairly.

The ethical issue extends beyond individual job losses to broader economic structures. If AI dramatically increases productivity while concentrating benefits among capital owners and highly skilled workers, it could exacerbate inequality. Responsible AI deployment requires considering economic impacts and supporting affected workers through retraining, education, and safety nets.

Autonomous Weapons and Military AI

The development of autonomous weapons systems that can select and engage targets without human intervention represents one of the most serious AI ethics issues. These systems raise fundamental questions about human dignity, the laws of war, and the delegation of life-or-death decisions to machines. Many AI researchers and ethicists argue for international treaties banning fully autonomous weapons.

The ethical concerns include accountability for war crimes, the lowering of barriers to armed conflict, and the potential for AI arms races. When machines make targeting decisions, who bears moral responsibility for civilian casualties? Ethical AI development in military contexts requires robust human oversight and clear ethical boundaries on autonomous decision-making authority.

Environmental Impact

AI systems, particularly large language models and computer vision systems, require enormous computational resources that consume significant energy and contribute to carbon emissions. By one widely cited 2019 estimate, training a single large AI model with an extensive architecture search can produce as much carbon as five cars over their entire lifetimes. The environmental cost of AI represents an often-overlooked ethics issue that will grow as AI deployment expands.

Responsible AI must balance capability with sustainability. Developing more efficient algorithms, using renewable energy for computation, and considering environmental impact in AI design decisions are becoming ethical imperatives. As climate change accelerates, the environmental consequences of AI cannot be ignored in ethical frameworks.

Building Responsible AI: Practical Steps and Best Practices

Diverse and Representative Training Data

Creating fair AI starts with training data that accurately represents all populations the system will serve. This means actively seeking diverse datasets, identifying and correcting historical biases, and ensuring adequate representation of minority groups. Responsible AI development treats data collection as an ethical act, not just a technical requirement.

Practical steps include auditing existing datasets for demographic balance, supplementing underrepresented groups, and questioning whether historical data should guide future predictions. When complete representation isn't possible, acknowledging limitations and restricting deployment to populations adequately represented in training data demonstrates ethical responsibility.
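An audit for demographic balance can start as a simple comparison between the dataset and a reference population. The sketch below is one minimal way to do that, assuming group labels are available for each record. The function `representation_gaps`, the group names, the reference shares, and the 0.8 cutoff are all illustrative assumptions rather than an established standard.

```python
from collections import Counter


def representation_gaps(group_labels, reference_shares, min_ratio=0.8):
    """Flag groups whose dataset share falls below min_ratio of their reference share.

    Returns {group: (observed_share, expected_share)} for flagged groups only.
    """
    counts = Counter(group_labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset has no records")

    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if observed < min_ratio * expected:
            gaps[group] = (observed, expected)
    return gaps


# Hypothetical dataset of 100 records and a hypothetical reference population.
labels = ["a"] * 80 + ["b"] * 15 + ["c"] * 5
reference = {"a": 0.6, "b": 0.2, "c": 0.2}

gaps = representation_gaps(labels, reference)
for group, (observed, expected) in gaps.items():
    print(f"{group}: {observed:.0%} of data vs {expected:.0%} of population")
```

Flagged groups are candidates for supplementary data collection, or for a documented restriction on where the system is deployed.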

Bias Testing and Auditing

Detecting AI bias requires systematic testing across demographic groups and use cases. Before deployment, AI systems should undergo rigorous bias audits that measure performance disparities across protected characteristics like race, gender, and age. These audits should examine not just overall accuracy but fairness metrics like equal opportunity, demographic parity, and individual fairness.
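Two of the fairness metrics named above can be computed directly from predictions. Demographic parity compares how often each group receives a positive prediction, while equal opportunity compares true positive rates, meaning how often genuinely qualified people in each group are approved. The following is a minimal sketch with hypothetical data and helper names (`selection_rate`, `true_positive_rate`, `fairness_gaps`); it assumes every group contains at least one positive label.

```python
def selection_rate(predictions):
    """Share of cases that received a positive prediction."""
    return sum(predictions) / len(predictions)


def true_positive_rate(labels, predictions):
    """Share of actual positives that were predicted positive."""
    hits = [pred for label, pred in zip(labels, predictions) if label == 1]
    return sum(hits) / len(hits)


def fairness_gaps(by_group):
    """by_group maps a group name to a (labels, predictions) pair of 0/1 lists."""
    rates = {g: selection_rate(preds) for g, (_, preds) in by_group.items()}
    tprs = {g: true_positive_rate(labels, preds) for g, (labels, preds) in by_group.items()}
    return {
        "demographic_parity_difference": max(rates.values()) - min(rates.values()),
        "equal_opportunity_difference": max(tprs.values()) - min(tprs.values()),
    }


# Hypothetical audit data: both groups have the same share of true positives.
audit = {
    "group_a": ([1, 1, 1, 0, 0], [1, 1, 1, 1, 0]),
    "group_b": ([1, 1, 1, 0, 0], [1, 0, 0, 0, 0]),
}
gaps = fairness_gaps(audit)
print(gaps)
```

A gap of zero on either metric means the groups are treated identically on that measure. The two metrics can disagree, so an audit usually reports several of them rather than relying on one.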

Ongoing monitoring is equally important. AI bias can emerge or worsen over time as systems interact with evolving data and environments. Responsible AI includes continuous testing, regular audits, and mechanisms for users to report suspected bias. Organizations should establish clear metrics for acceptable bias levels and take corrective action when thresholds are exceeded.
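Threshold-based monitoring can be sketched as a check over a series of periodic fairness measurements. The example assumes a fairness gap is already computed for each reporting period. The function `first_sustained_breach`, the 0.05 limit, and the requirement of two consecutive breaches are hypothetical choices that an organization would set for itself.

```python
def first_sustained_breach(gaps, max_gap, patience=2):
    """Return the index of the period where the gap has exceeded max_gap
    for `patience` consecutive periods, or None if that never happens."""
    streak = 0
    for index, gap in enumerate(gaps):
        # Reset the streak whenever a period falls back within the limit.
        streak = streak + 1 if gap > max_gap else 0
        if streak >= patience:
            return index
    return None


# Hypothetical monthly fairness gaps for a deployed model.
monthly_gaps = [0.03, 0.06, 0.04, 0.07, 0.09, 0.05]
breach = first_sustained_breach(monthly_gaps, max_gap=0.05, patience=2)
print("corrective action triggered at period:", breach)
```

Requiring consecutive breaches is one way to avoid reacting to a single noisy measurement. A stricter policy could set `patience=1` for high-stakes systems.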

Transparency and Documentation

Ethical AI requires clear documentation of how systems work, what data they use, what decisions they make, and what limitations they have. Model cards and datasheets provide standardized formats for communicating this information to users, auditors, and affected individuals. Transparency enables accountability and helps identify potential ethical issues before they cause harm.

Documentation should include information about training data sources and demographics, model architecture and performance metrics, known limitations and failure modes, and intended use cases and inappropriate applications. This transparency allows downstream users to make informed decisions about whether and how to deploy AI systems responsibly.
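The documentation items listed above map naturally onto a structured record that can be checked for completeness before release. The sketch below is a hypothetical structure that mirrors that list; it is not the schema of any published model card or datasheet format, and the example values are invented.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class ModelCard:
    """Hypothetical documentation record mirroring the items listed in the text."""
    model_name: str
    training_data_sources: list = field(default_factory=list)
    training_data_demographics: dict = field(default_factory=dict)
    architecture: str = ""
    performance_metrics: dict = field(default_factory=dict)
    known_limitations: list = field(default_factory=list)
    intended_uses: list = field(default_factory=list)
    out_of_scope_uses: list = field(default_factory=list)


def missing_sections(card):
    """List the documentation sections that are still empty."""
    return [name for name, value in asdict(card).items() if not value]


# Invented example: a partly documented model.
card = ModelCard(
    model_name="triage-risk-v1",
    training_data_sources=["hospital_records_sample"],
    architecture="gradient-boosted trees",
    performance_metrics={"auc": 0.81},
    intended_uses=["flag patients for nurse follow-up"],
)
missing = missing_sections(card)
print("incomplete sections:", missing)
```

A check like this could run in a release pipeline so that a model without documented demographics, limitations, or out-of-scope uses is not shipped by accident.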

Human Oversight and Control

Responsible AI maintains meaningful human control over high-stakes decisions. While AI can provide recommendations and analysis, humans should retain ultimate authority over decisions affecting fundamental rights, safety, and wellbeing. This "human-in-the-loop" approach ensures that contextual judgment, ethical reasoning, and accountability remain with people capable of moral responsibility.

The level and nature of human oversight should match the stakes and risks of AI decisions. Critical applications like medical diagnosis, criminal justice, and financial decisions warrant more robust oversight than low-stakes applications like product recommendations. Ethical AI frameworks define appropriate oversight levels and ensure humans have sufficient information, time, and authority to exercise meaningful control.
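Matching oversight to stakes can be expressed as a routing rule that decides when a model's output may take effect without a person reviewing it. This is a simplified sketch: the two-level `Stakes` enum, the `route_decision` function, and the 0.9 confidence cutoff are hypothetical, and a real policy would be set by the organization's governance process.

```python
from enum import Enum


class Stakes(Enum):
    LOW = "low"    # e.g. product recommendations
    HIGH = "high"  # e.g. medical, criminal justice, or financial decisions


def route_decision(stakes, model_confidence, auto_threshold=0.9):
    """Decide whether a model output can be applied automatically.

    High-stakes decisions always go to a person. Low-stakes decisions are
    automated only when the model is sufficiently confident.
    """
    if stakes is Stakes.HIGH:
        return "human_review"
    if model_confidence < auto_threshold:
        return "human_review"
    return "automated"


print(route_decision(Stakes.HIGH, 0.99))  # high stakes: always reviewed
print(route_decision(Stakes.LOW, 0.95))   # low stakes, confident model
print(route_decision(Stakes.LOW, 0.60))   # low stakes, uncertain model
```

Routing to a reviewer is only meaningful if that person has the information, time, and authority to disagree with the model.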

Stakeholder Engagement and Participation

Building ethical AI requires input from diverse stakeholders, especially communities affected by AI systems. Responsible AI development involves affected populations in design decisions, incorporates their values and concerns, and creates channels for ongoing feedback. This participatory approach produces fairer and more effective systems while respecting stakeholder autonomy and dignity.

Engagement should begin early in development, not after systems are built. Communities can help identify potential harms, suggest alternative approaches, and define appropriate fairness metrics. Their lived experience provides insights that technical teams alone cannot access. Ethical AI treats affected populations as partners in development, not just end users or test subjects.

Establishing Ethical Guidelines and Governance

Organizations developing or deploying AI should establish clear ethical guidelines and governance structures. These frameworks define ethical principles, set boundaries on acceptable uses, create accountability mechanisms, and provide guidance for ethical dilemmas. Governance structures should include diverse perspectives, independence from commercial pressures, and authority to halt problematic projects.

AI ethics committees or boards can review proposed systems, audit existing deployments, and make recommendations about ethical risks and mitigation strategies. These bodies should include technical experts, ethicists, domain specialists, and community representatives. Their diversity ensures comprehensive evaluation of potential ethical issues from multiple perspectives.

At Secuodsoft, we're an AI-first solution company committed to responsible AI development. We help organizations build AI and machine learning systems that are not only powerful and effective but also ethical and fair. Our development approach integrates bias testing, transparency, and ethical considerations from the earliest stages of project planning through deployment and monitoring. We work with clients to identify potential AI ethics issues in their specific contexts, implement appropriate safeguards, and establish governance frameworks that ensure ongoing accountability. Whether you're developing computer vision systems, natural language processing applications, or integrated AI solutions, we provide the expertise to build technology that aligns with your values and serves all users fairly.

The Role of Regulation in Ethical AI

Governments worldwide are developing regulations to ensure responsible AI. The European Union's AI Act categorizes AI systems by risk level and imposes requirements accordingly. High-risk applications like hiring, credit scoring, and law enforcement face strict transparency, testing, and human oversight requirements. The regulation aims to protect fundamental rights while fostering innovation.

The United States has taken a more sector-specific approach, with agencies like the FTC using existing consumer protection laws to address AI harms. Industry-specific regulations in healthcare, finance, and transportation incorporate AI-specific provisions. This regulatory landscape is evolving rapidly as policymakers balance innovation incentives with protection against AI risks.

Effective AI regulation must be technically informed, flexible enough to accommodate rapid technological change, and strong enough to prevent serious harms. International cooperation is essential, as AI systems cross borders and global companies operate across jurisdictions. Responsible AI development increasingly means compliance with multiple regulatory frameworks.

The Future of AI Ethics and Responsible AI

The field of AI ethics continues evolving as AI capabilities expand and new challenges emerge. Emerging areas include the ethics of artificial general intelligence, the moral status of AI systems themselves, and the governance of AI development trajectories. As AI becomes more capable and autonomous, ethical frameworks must adapt to address unprecedented questions.

The growing recognition of AI ethics' importance has spawned new academic programs, professional certifications, and career paths. Organizations are creating dedicated AI ethics roles and teams. This professionalization of AI ethics reflects its maturation from philosophical speculation to practical necessity for anyone building or deploying AI systems.

Looking forward, responsible AI will likely become a competitive advantage as consumers, employees, and investors increasingly value ethical AI practices. Organizations known for fair, transparent, and accountable AI will build trust and loyalty. Those ignoring AI ethics face reputational damage, regulatory penalties, and loss of public confidence.

Conclusion: Building the Future We Want with Responsible AI

AI ethics and bias aren't obstacles to innovation. They're essential guardrails ensuring AI benefits humanity broadly rather than amplifying existing inequalities or creating new harms. As AI systems become more powerful and autonomous, the ethical stakes grow higher. We cannot afford to treat ethics as an afterthought or optional consideration.

Building responsible AI requires technical excellence, ethical awareness, and genuine commitment to fairness and accountability. It demands that we question assumptions, examine data critically, test rigorously for bias, maintain transparency, and listen to affected communities. These practices don't slow innovation. They make innovation sustainable and trustworthy.

The AI systems we build today will shape society for decades. They'll influence who gets opportunities, who receives care, who gains access to resources, and whose voices are heard. These aren't just technical decisions. They're moral choices about the kind of world we want to create. Ethical AI ensures those choices align with human dignity, equality, and justice.

Every developer, every organization, and every person involved with AI bears responsibility for its ethical development and deployment. Understanding AI ethics and bias, learning from past examples, addressing critical ethics issues, and implementing responsible AI practices aren't optional extras. They're fundamental requirements for anyone working with artificial intelligence.

The future of AI is being written now. By committing to ethical AI development, we can ensure that future is one where technology serves humanity broadly, respects fundamental rights, and creates opportunities for all rather than advantages for few. The question isn't whether we can build responsible AI. It's whether we have the wisdom and courage to do so.

Frequently Asked Questions (FAQ)

What is AI ethics and why is it important?

AI ethics refers to the moral principles and guidelines governing how artificial intelligence systems are developed, deployed, and used. It's important because AI increasingly makes decisions affecting people's lives, from determining who gets hired or approved for loans to influencing medical diagnoses and criminal justice outcomes. Without ethical frameworks, AI systems can perpetuate discrimination, violate privacy, lack accountability, and cause harm to individuals and society. Ethical AI ensures that as these systems become more powerful, they remain aligned with human values like fairness, justice, transparency, and respect for human rights. As AI touches more aspects of daily life, AI ethics becomes essential for building technology that benefits everyone rather than serving narrow interests or amplifying existing inequalities.

How does bias get into AI systems?

AI bias enters systems through multiple pathways throughout development. The most common source is biased training data that reflects historical discrimination or doesn't represent all populations adequately. If an AI learns from data where certain groups were systematically disadvantaged, it learns to replicate that discrimination. Bias can also stem from how problems are framed, which variables are chosen as inputs, how success is measured, and what proxy metrics are used. Even seemingly neutral technical choices encode values and assumptions that can produce biased outcomes. Additionally, the lack of diversity among AI developers means potential biases may not be recognized during development. AI systems don't create bias from nothing. They amplify and automate biases present in data, design decisions, and development processes, which is why responsible AI development requires actively identifying and mitigating these sources of bias.

What are some real-world examples of AI bias?

Notable AI bias examples include Amazon's hiring algorithm, which discriminated against women after learning from historical data showing male-dominated hiring patterns. The COMPAS criminal risk assessment tool exhibited racial bias, incorrectly flagging Black defendants as high risk at nearly twice the rate of white defendants. A healthcare algorithm used nationwide systematically provided less care to Black patients than white patients with identical health needs because it used healthcare costs as a health proxy, and systemic inequalities meant Black patients received less expensive care. Facial recognition systems show dramatically higher error rates for people with darker skin tones, with some commercial systems misclassifying darker-skinned women up to 35% of the time while achieving near-perfect accuracy on lighter-skinned men. These examples demonstrate how AI bias creates real harm across critical domains like employment, justice, healthcare, and security, affecting millions of people's opportunities and wellbeing.

How can AI bias be prevented?

Preventing AI bias requires proactive measures throughout development and deployment. Start with diverse, representative training data that accurately reflects all populations the system will serve, actively correcting historical biases rather than simply using available data. Conduct rigorous bias testing across demographic groups before deployment, measuring not just overall accuracy but fairness metrics that detect disparities. Ensure diverse development teams who can identify potential biases others might miss. Implement transparency and documentation practices that make it possible to audit systems for bias. Maintain meaningful human oversight, especially for high-stakes decisions. Engage affected communities in design processes to understand potential harms and appropriate fairness criteria. Establish ongoing monitoring to detect bias that emerges after deployment. Create clear accountability structures and remediation processes when bias is discovered. Preventing AI bias isn't a one-time checklist but an ongoing commitment requiring technical rigor, ethical awareness, and organizational accountability.

What is responsible AI development?

Responsible AI development is an approach to building artificial intelligence systems that prioritizes ethical considerations alongside technical performance. It means actively working to ensure AI is fair, transparent, accountable, and aligned with human values and rights. Responsible AI includes preventing and mitigating bias, protecting privacy and security, maintaining human control over high-stakes decisions, documenting systems clearly, testing rigorously across diverse populations, and engaging stakeholders in design processes. It requires considering potential harms before deployment and establishing governance frameworks that ensure ongoing accountability. Responsible AI treats ethics not as constraints on innovation but as essential requirements for building trustworthy, sustainable technology. Organizations committed to responsible AI establish ethical guidelines, create oversight mechanisms, invest in bias detection and mitigation, maintain transparency about capabilities and limitations, and prioritize long-term societal benefit over short-term competitive advantage. As AI becomes more powerful and consequential, responsible development becomes an existential necessity for the field.